Introduction

In my previous two posts I introduced the Cloud based data broker technology ERDDAP and demonstrated how one can use it to obtain geo-spatial scientific environmental data:

- Access sensor data on a buoy located in the Irish Sea.

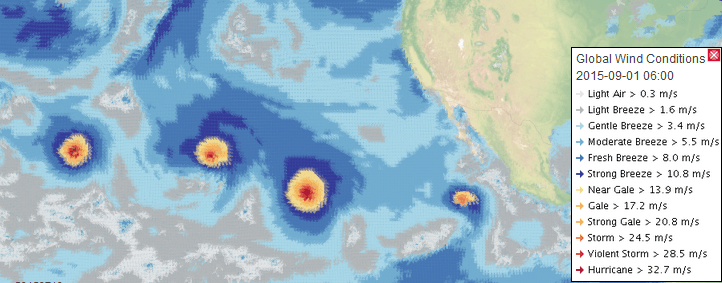

- Get and display weather forecast data from the Global Forecast System (GFS).

The use of data brokers to unify data catalogues is an approach taken by both the National Oceanic and Atmospheric Administration (NOAA) of the USA (ERDDAP) and the intergovernmental Group on Earth Observations (GEO Discovery and Access Broker). In this post I discuss the potential impact of this type of technology.

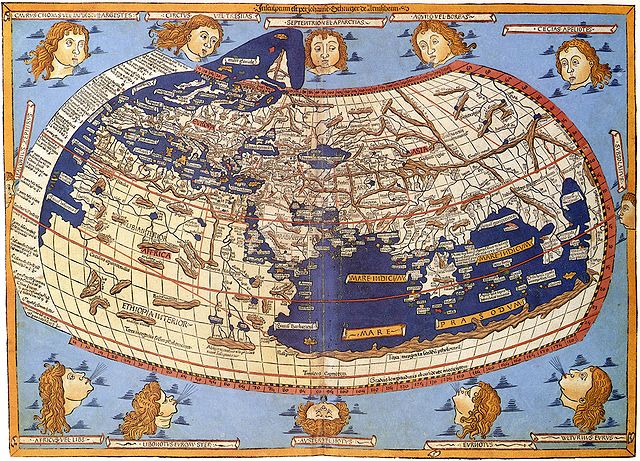

The Digital Earth

In 1998, the vice president of the United States, Al Gore, put forward the concept of a Digital Earth that would display geo-referenced data and would be connected to the world’s digital knowledge archives. He recognised the potential of a Digital Globe to address environmental and climate change issues, among others. This was not an entirely new idea. In 1962 Buckminster Fuller had proposed the Geoscope, a physical globe 61 metres in diameter that would be connected to computers containing both historical and current data and would visualise global patterns derived from such data.

Information technology in 1962 was not advanced enough for a Geoscope. By 1998, however, it had almost advanced enough to make Al Gore’s vision for a Digital Earth a possibility. At the time he identified six technologies that needed to improve in order for a Digital Earth to work:

- Computer Science: Better modelling and simulation to give us new insights into the data that we are collecting about our planet.

- Mass Storage: The biggest consumer hard drives in 1998 held 10 gigabytes. Now, through Cloud technologies, we have access to enormous amounts of data storage.

- Satellite Imagery: Dramatic improvements were needed. In recent years there has been a large improvement in spatial and temporal resolution. In addition, much Earth Observation data are now published under Open Data licences.

- Broadband Networks: Broadband is now almost everywhere with mobile broadband both cheap and abundant.

- Interoperability: Interoperability that enables remote access to data silos. Now reaching maturity.

- Metadata: Standardisation of data about data. Now reaching maturity.

Such has been the pace of progress in information technology that the first four of the above points advanced rapidly enough to allow technologies such as Google Earth (launched 2005) and Bing Maps (launched 2010) to come into existence.

The final two technologies, interoperability and metadata, are now reaching maturity. Advancements in these technologies have been led by a growing interest in data science, the adoption of Web architectures (Cloud computing etc.) and by major efforts led by entities such as the European Union, the USA and the UN to improve the sharing of all types of Earth Observations (satellites, terrestrial sensors and models).

Spatial Data Infrastructures

By its very nature Earth Observation (EO) data has a geographic component, and a key strategy to promote and share data has been the development of Spatial Data Infrastructures (SDI). At its simplest, an SDI is a collection of geographic data, metadata and tools that relies on an information technology infrastructure to use spatial data in an efficient and flexible way. The provision of SDI for the sharing of EO and environmental data is a policy followed by the EU, USA and the UN (and many others!):

- Infrastructure for Spatial Information in the European Community (INSPIRE) Directive (2007/2/EC). This addresses spatial data themes needed for environmental applications and strives to ensure that the SDIs of member states are usable in a transboundary context.

- National Spatial Data Infrastructure (NSDI) in the United States. United States Office of Management and Budget (OMB) Circular A-16 (2002).

- UN Global Geospatial Information Management (UN-GGIM) aims at playing a leading role in setting the agenda for the development of global geospatial information.

- Global Earth Observation System of Systems (GEOSS), a pan-national initiative dedicated to improving accessibility to Earth Observation data through the development of a System of Systems.

Most recently there has been widespread adoption of Open Access or Open Data policies for environmental and Earth Observation data. With these developments we are seeing a paradigm shift towards a data-centric Environmental Observation Web, where data is semantically enriched, thus enabling the consumption, production and reuse of environmental observations in cross-domain applications. We are also seeing the proliferation of data portals and catalogues:

- http://www.geoportal.org/ : The Group on Earth Observations portal (GEOSS).

- http://marine.copernicus.eu/ : The EU Commission portal for marine modelling data.

- https://www.data.gov/ : The Open Data portal of the US Government.

- https://scihub.copernicus.eu/dhus/#/home : The Open Access data portal of the European Space Agency.

The proliferation of these portals leads to a problem of data discovery. In an ideal world one should be able to perform machine-to-machine queries and data downloads against these data portals. However, these portals do not have uniform application programming interfaces (APIs) or file formats. So the challenge is: how do we unify these resources?

Data Brokers – Explanation

An approach taken by NOAA is to use brokering technology to aid data discovery and sharing. ERDDAP (from NOAA) is an Open Source data brokering technology developed in Java as a web application. It is hosted in a Tomcat web server and is both a message broker and data mediator:

- Message Broker: It receives each data request as a URL (an HTTP request), which it then converts to the native protocol of the data server containing the desired data.

- Data Mediator: Upon receiving the requested data from a remote server, it converts the data into the format requested by the client.
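To make the two roles a little more concrete, here is a minimal sketch of how a client might compose a request to ERDDAP's tabledap service. The request is just a URL, and the file extension on the dataset name selects the output format that ERDDAP returns. The server address, dataset ID and variable names are hypothetical placeholders, and the helper `build_tabledap_url` is my own illustration rather than part of ERDDAP.

```python
from urllib.parse import quote


def build_tabledap_url(server, dataset_id, variables, constraints, file_type):
    """Compose a tabledap-style data request for an ERDDAP server."""
    # The file extension tells the broker which output format to return.
    path = f"{server}/tabledap/{dataset_id}.{file_type}"
    # Variables are comma-separated; each constraint is appended with '&'.
    query = ",".join(variables)
    for constraint in constraints:
        # Percent-encode characters such as '>' and ':' inside the constraint.
        query += "&" + quote(constraint, safe="=")
    return f"{path}?{query}"


# Hypothetical server, dataset and variable names, for illustration only.
SERVER = "https://erddap.example.org/erddap"
VARIABLES = ["time", "sea_temperature"]
CONSTRAINTS = ["time>=2016-01-01T00:00:00Z"]

csv_url = build_tabledap_url(SERVER, "buoy_obs", VARIABLES, CONSTRAINTS, "csv")
json_url = build_tabledap_url(SERVER, "buoy_obs", VARIABLES, CONSTRAINTS, "json")
print(csv_url)
print(json_url)
```

The two URLs differ only in the extension (`.csv` versus `.json`), which is the point: the client asks for a format and leaves the conversion to the broker.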

ERDDAP is a way of unifying dispersed data silos/servers. It is middleware that sits between the client (human or computer) and the dispersed data servers. It merges the catalogues from remote data, local data and remote catalogues into one cohesive catalogue. Clients can use this catalogue to query and download data from multiple sources. There is no need for the client to know where the data is physically stored. So long as ERDDAP knows, the data is accessible.
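As a rough illustration of what the unified catalogue looks like to a program: ERDDAP's JSON responses wrap results in a `table` object with `columnNames` and `rows`. The sketch below turns such a response into a list of dictionaries. The sample payload is made up for this example (a real catalogue search returns more columns), and `table_to_records` is a hypothetical helper name.

```python
import json

# Made-up sample in the shape of an ERDDAP JSON table response.
SAMPLE_RESPONSE = """
{"table": {
  "columnNames": ["Title", "Institution", "Dataset ID"],
  "rows": [
    ["Weather buoy observations", "Example Marine Agency", "buoy_obs"],
    ["Profiling float data", "Example Ocean Institute", "float_profiles"]
  ]
}}
"""


def table_to_records(payload):
    """Convert an ERDDAP-style JSON table into a list of dicts, one per row."""
    table = json.loads(payload)["table"]
    names = table["columnNames"]
    return [dict(zip(names, row)) for row in table["rows"]]


records = table_to_records(SAMPLE_RESPONSE)
for record in records:
    # The client sees one catalogue, whichever server actually holds the data.
    print(record["Dataset ID"], "-", record["Title"])
```

Note that nothing in the records says where the data physically lives. That detail stays inside the broker.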

Data Brokers and Digital Earth/Globe

So how do data brokers fit with the Digital Earth concept? In tandem with these improvements in data discovery and data sharing, there has been a shift in general computing towards Cloud-based technologies and web-based Application Programming Interfaces (APIs). A technology like ERDDAP can act as middleware between data sources and a web-based Digital Earth/Globe (or any other client), unifying disparate data silos and providing a single API familiar to software developers. Thus we can use web services to collate data from authoritative sources for analysis, interpretation and display on a Digital Globe.

For example, the links below connect to ocean properties as observed by different types of sensor and published on servers located in three different countries: Ireland, France and the USA.

- Ireland: Ocean properties from Irish deep sea weather buoy

- France: Ocean properties from ARGOS floating buoys

- USA: Ocean properties from the Soil Moisture and Ocean Salinity satellite (a European satellite)

So, as we see here, we now have heterogeneous data sources accessible via a common API.
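A small sketch of what "a common API" means in practice: the same metadata request pattern (`/info/<datasetID>/index.json`) can be pointed at any ERDDAP server, so the client code has no per-country branches. The three server addresses and dataset IDs below are placeholders that I have invented for illustration. They are not the real endpoints behind the links above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Source:
    country: str
    base_url: str    # hypothetical ERDDAP server address
    dataset_id: str  # hypothetical dataset identifier


# Placeholder sources standing in for the three servers listed above.
SOURCES = [
    Source("Ireland", "https://erddap.example.ie/erddap", "weather_buoy"),
    Source("France", "https://erddap.example.fr/erddap", "profiling_floats"),
    Source("USA", "https://erddap.example.gov/erddap", "satellite_salinity"),
]


def info_url(source):
    """Build the dataset metadata request; the pattern is the same everywhere."""
    return f"{source.base_url}/info/{source.dataset_id}/index.json"


info_urls = [info_url(source) for source in SOURCES]
for source, url in zip(SOURCES, info_urls):
    print(f"{source.country}: {url}")
```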

Conclusion

In the picture below we can see Argos floating buoys displayed in the Digital Ocean web portal from the Marine Institute of Ireland. The buoy data is queried using ERDDAP and then composed within the web page.

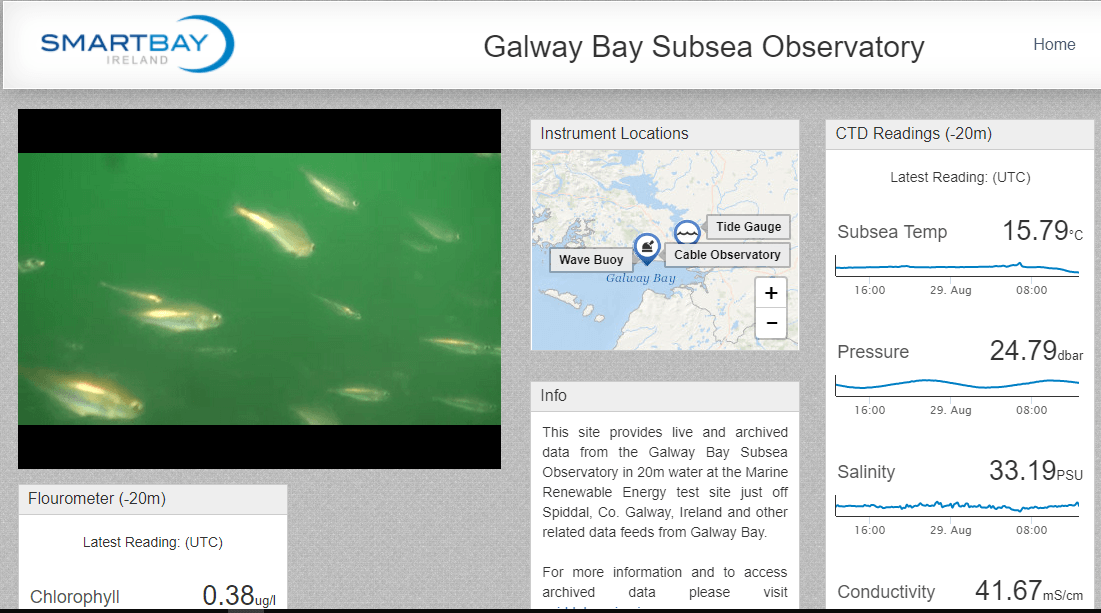

Similarly, in the picture below we see a screenshot of the SmartBay dashboard from the Marine Institute. Some of the data in the dashboard comes from an ERDDAP data broker.

So, I hope that I have illustrated that the problem of accessing digital knowledge archives in a standard way is solved. Surely it is only a matter of time before we see Digital Globes that can be composed on demand by combining simple-to-access data streams from sensors around the Earth.