In the past I’ve learned one thing: the only chance to do efficient geodata-projects is to have a clean data structure.

In this post I’ll show you some tools and share my thoughts about organizing (geo)data to have more efficient project-workflows. After that I would be very interested in your solutions and approaches to do smart data-projects.

1. Think about data-types, provider or/and topic, local geodata storage

At the beginning of small projects I usually make one decision. I determine the main criteria for storing my project-files. Relating to the main project-goals I either decide if I segmenting the data in their data-types (e.g. raster-datasets, vector-datasets, numeric-datasets), or store them separated by data-provider (e.g. customer-datasets, governmental-datasets), or split the datasets into topic-segments (e.g. background-map, roads, rivers, poi-datasets).

As result I have a folder-structure on my local workstation. Often, especially when projects gonna grow up, this technique runs the risk of messing up given data structures.

So bigger projects should be organized on database-level (e.g. PostGIS) to have a good overview, data-filtering possibilities and some great indexing features to improve speed of data-analyses.

2. Geodata databases

If you work on a professional level, sooner or later there is no getting around geodatabase solutions to store your project geodata. If you’re totally new to this term, I suggest to read this short article from my dear colleague Riccardo about “Geodatabases: a little insight“. If you’re interested in setting up such a geodatabase, please try this tutorial also from Riccardo: “OpenSource QGIS + PostGIS installation: “the Windows way”“.

In general geodatabases allows you to store geodata in a database format with its geometry information, add (connect) the data directly into your desktop GIS-Software and process the geodata directly on the database server. The last point is a very powerful one. With e.g. SQL-queries you are able to select your data needs directly from the source and save them as intermediate datasets. Of course you can perform really smart requests and get out final results out of the database.

From my point of view you have many advantages with working on a geodatabase-level. The biggest disadvantages are the hard learning curve to handle database requests and the database configuration in advance.

Here you are:

– PostgreSQL with PostGIS

– ESRI GIS Tools for Hadoop

– more

3. Geodata clouds

After the local- and dedicated database way of storing geodata, cloud storing is the third possibility.

Online services like GeoCloud2 or QGIS Cloud allows you to upload spatial data, manage data, edit data online and combine the data with fancy online techniques like JavaScript magic, the speed enhancement of a CDN content delivery network and so on.

Maybe this could be a good way to work together with people who havn’t got any GIS-skills, but are familiar with data manipulation within web interfaces.

Here you are:

– QGIS Cloud

– MapCentia GeoCloud2

4. So what?

- What do you think about these three possibilities of storing geodata?

- What’s your “best case” solution?

- Which tools do you use for organizing geodata in projects?

- How do you deal with participants without GIS-skills?

I’m very interested in your comments!

I like databases, within geoworld I use this approach especially if I need to analyze/process data through long period of time – for me it seems to be faster getting for example 100 rows from database (well designed db and query) than load 100 files into memory

Good summary of the current possibilities, however this decision how to store the data needs a lot more factors to be considered than the size of the project or how “professional” you are working (whatever this is). In my opinion the decision comes with the nature of the data and the project. A dbms has advantages if the data is very large or needs to be joined very often. Another advantages are the concurrent access of multiple users, finer granularity of access permissions, methods for preventing data loss, better handling of (automatic) inserts of new data and/or updates and better… Read more »

I’ve been using Geogig as a version control/storage solution for small projects. I push it to a remote repository for back up on my personal sever as well. I like Geogig for the version control system however it is limited to the datatypes in can store and I mostly store small data sets. Haven’t tried it with large data sets yet. It also works with PostGIS.

Very interesting. Would you like to write a little GeoGig description for digital-geography.com?

I’ll PM you Jakob.

Where?

I sent it to info@digital-geography.com.

Hi, I’m the creator of MapCentia GC2. One of the main reasons to create GC2, were to flatten the learning curve for among other things PostGIS, so that “ordinary” GIS users can utilise the technology. GC2 is used today for both large and small projects. An example is a municipality, which for each small project creates a PostgreSQL schema where in all related data is stored.

Most organizations that use GC2, have their own local installation of the system.

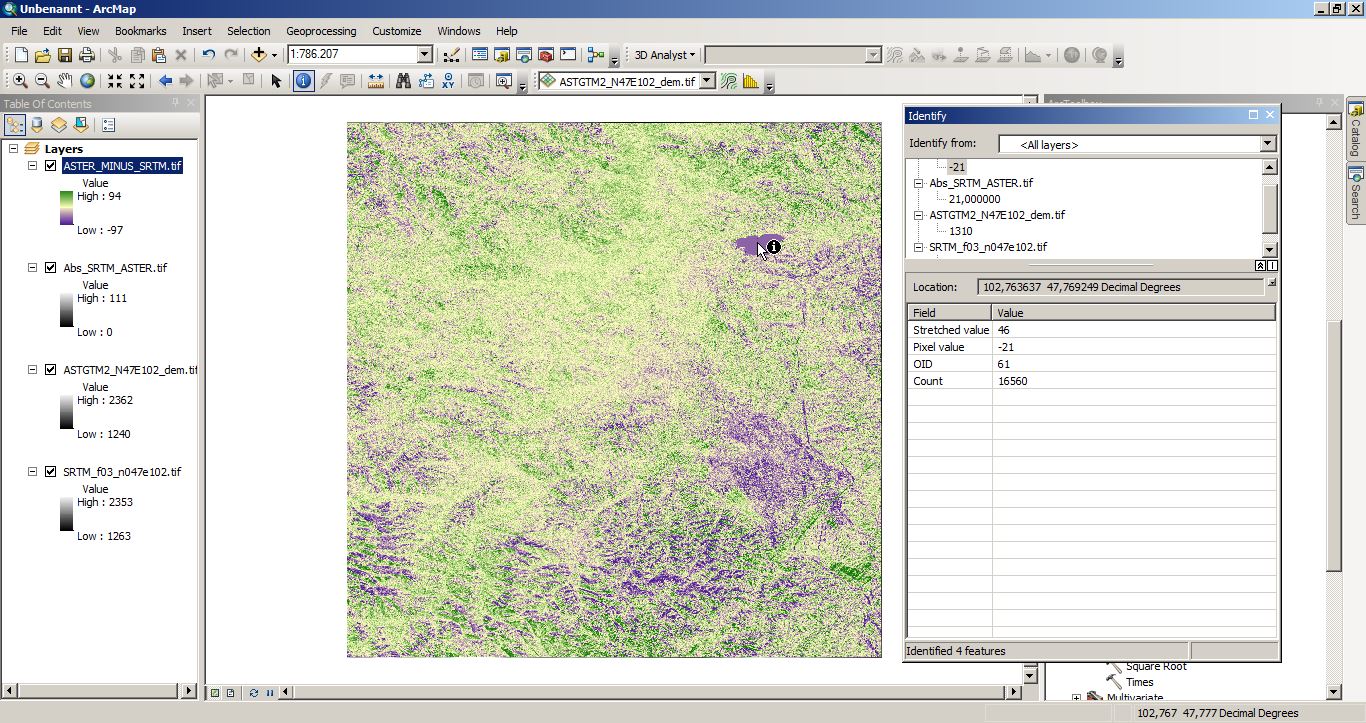

these are good points, but things get somehow complicated when dealing with raster datasets of projects. A Landsat-8 OLI image might be used in combination with UAV, cadastre maps, land cover maps for a given project, and other projects might use one or several same products. In addition, some products have processing levels that are important to track or search. So in my experience the effective storing and indexing of rasters is an issue

Do you have any approaches to deal with storing big raster datasets?

We are currently upgrading our SDI and spatial database, we work with ArcSDE and found the mosaic dataset to generally work well for our needs. However, we found the indexing and cataloging to be the larger issue, especially when the nature of the projects are diverse. So the answer is that I am still experimenting to conclude on a best-fit approach. Would be nice to see some robust opensource solutions on this matter.

Great. Yes, this would be my next question. Are there any known robust open source solutions for this approach?

A very important topic to discuss and think about. For my part, the storage place, as in files or geodatabases is in large part inconsequential or academic (not really, but to underscore the main point…). Our office has been using geospatial data for 2 decades. In that time we’ve tried a number of different organizational schemes, ranging from well thought out, meticulously planned and modelled to shoot from the hip and just get the job of the moment done. All of them — all — have been wrong. Wrong in the sense that each create new friction points just as… Read more »